Understanding Bot Traffic

Bot traffic is any visit to your links made by automated software rather than by a person. While this might sound concerning, most bot traffic is legitimate and serves important functions on the internet.

Why Do Bots Click Links?

Bots click links for several legitimate reasons:

- Link previews — Social platforms like Facebook, X, and LinkedIn fetch your links to generate preview cards

- Spam detection — Email providers and social networks check links to protect users from malicious content

- Search indexing — Search engines like Google crawl links to index content

- Security scanning — Anti-virus and firewall services verify link destinations

How Linkly Identifies Bots

Linkly uses two methods to detect bot traffic:

1. User Agent Detection

Many bots identify themselves through their user agent string. For example, Google's crawler announces itself as Googlebot, Facebook's link preview crawler as facebookexternalhit, and X's as Twitterbot. See our full list of detected bots.
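As a rough illustration of user agent detection, the sketch below does a case-insensitive substring match against a short token list. The function name and the token list are assumptions made for this example. They are not Linkly's actual implementation or its full list of detected bots.

```python
# Illustrative tokens only; this is not Linkly's actual detection list.
KNOWN_BOT_TOKENS = ("googlebot", "facebookexternalhit", "twitterbot", "linkedinbot")


def is_self_identified_bot(user_agent: str) -> bool:
    """Return True if the user agent contains a known bot token (case-insensitive)."""
    ua = user_agent.lower()
    return any(token in ua for token in KNOWN_BOT_TOKENS)


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 Chrome/120.0 Safari/537.36"

print(is_self_identified_bot(googlebot_ua))  # True
print(is_self_identified_bot(browser_ua))    # False
```

A substring match like this only catches bots that choose to announce themselves, which is why a second detection method is needed.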

2. ISP Detection

Some bots don't identify themselves, but we can detect them by checking whether the request originates from a cloud hosting provider like AWS, Google Cloud, or DigitalOcean. Traffic from data centers is almost always automated.
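One way to picture this check is a lookup of the visitor's IP address against a set of known data center network ranges. The sketch below uses Python's standard ipaddress module. The function name is hypothetical, and the ranges are reserved documentation blocks used as placeholders, not real provider ranges and not the data Linkly uses.

```python
import ipaddress

# Placeholder ranges (reserved documentation blocks) standing in for
# published cloud provider ranges. They are NOT real data center ranges.
EXAMPLE_DATACENTER_NETWORKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]


def is_datacenter_ip(ip: str, networks=EXAMPLE_DATACENTER_NETWORKS) -> bool:
    """Return True if the address falls inside any of the given networks."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)


print(is_datacenter_ip("192.0.2.17"))   # True: inside a listed range
print(is_datacenter_ip("203.0.113.5"))  # False: not in any listed range
```

A check of this kind cannot tell a script from a person whose connection happens to pass through a data center, which is why VPN users can be classified as bots.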

Social Media Crawler Handling

Linkly can optionally exclude social media crawlers from Facebook, X, LinkedIn, Google, and YouTube from your analytics. When Skip social crawler tracking is enabled on a link:

- They aren't recorded in your analytics

- They don't count against your click limits

- They're always allowed through, even when bot blocking is enabled

- They can still generate custom social previews

This keeps your analytics focused on human visitors while social sharing continues to work seamlessly.

Automatically Ignored User Agents

Linkly automatically ignores traffic from certain known bot user agents. These requests are redirected normally but not recorded in analytics or counted against your click limits:

- Bytespider — ByteDance's web crawler

- python-requests — Python HTTP library commonly used for automated scripts

- curl — Command-line HTTP tool

This filtering happens automatically for all links and requires no configuration.

Is Bot Traffic Bad?

In most cases, no. The majority of bots are "good bots" performing useful functions.

However, bot traffic can be problematic when it:

- Inflates click counts — Making it harder to measure real engagement

- Skews conversion rates — Bots never convert, so high bot traffic makes conversion rates appear lower

- Consumes click limits — Though social media crawlers can be excluded from limits
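To make the effect on conversion rates concrete, here is a small worked example. The click and conversion figures are invented for illustration only.

```python
def conversion_rate(conversions: int, clicks: int) -> float:
    """Conversions divided by clicks; 0.0 when there are no clicks."""
    return conversions / clicks if clicks else 0.0


# Made-up figures for illustration.
human_clicks = 200
bot_clicks = 300
conversions = 10  # bots never convert, so all conversions come from humans

raw_rate = conversion_rate(conversions, human_clicks + bot_clicks)
filtered_rate = conversion_rate(conversions, human_clicks)

print(f"With bots:    {raw_rate:.1%}")       # 2.0%
print(f"Without bots: {filtered_rate:.1%}")  # 5.0%
```

The same 10 conversions look like a 2% rate when bot clicks are included and a 5% rate once they are filtered out.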

For information about invalid traffic from advertising platforms, see our article on TikTok invalid traffic.

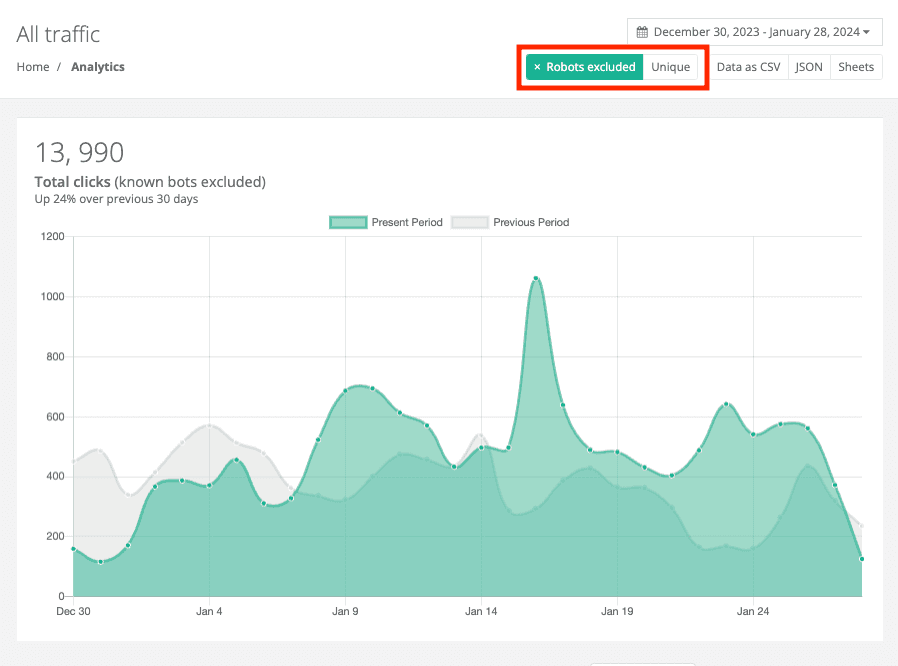

How to Filter Bots from Reports

Use the Filter robots button at the top right of your traffic reports to exclude bot traffic from your analytics.

Blocking Bot Traffic

You can optionally block bots from accessing your links entirely. However, we generally don't recommend this because:

- Good bots that check for spam may flag your link as suspicious if blocked

- Custom social previews won't work on most platforms

- Search engines won't be able to index your links

To block other bots while still allowing social media previews, enable both "Block bots & spiders" and "Skip social crawler tracking" on your link.

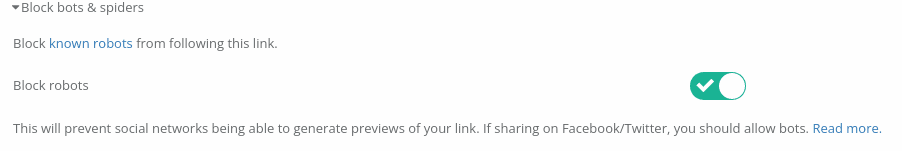

How to Block Bots

1. Create or edit a link

2. Under Block bots & spiders, enable Block robots

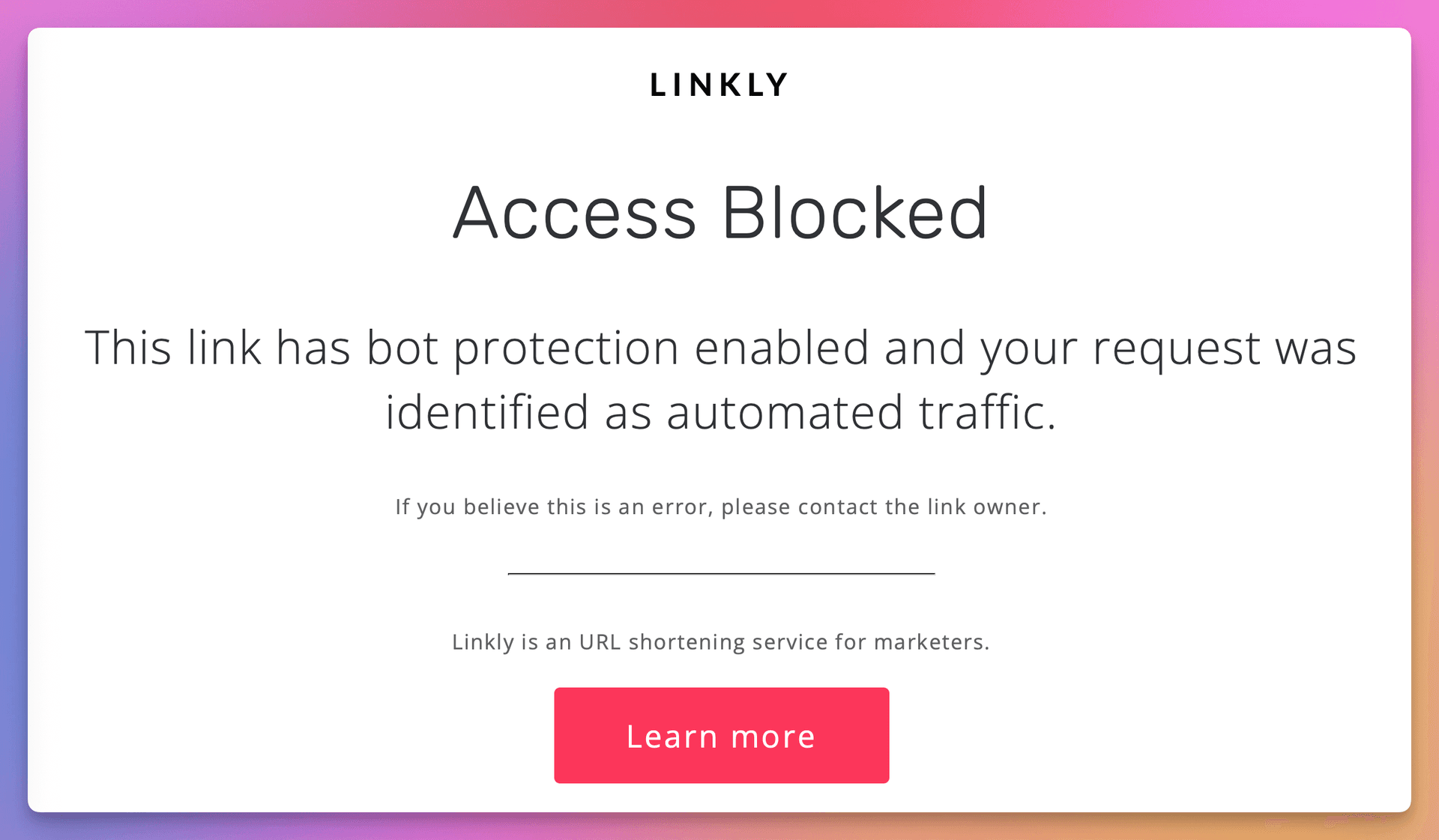

Blocked bots will see an Access Blocked page

The HTTP status code returned is 403 Forbidden.
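The blocking rules described in this article can be summarized in a small decision function. This is a sketch of the rules as stated here, not Linkly's actual code. The function and parameter names are hypothetical, and treating social crawlers as blocked when Skip social crawler tracking is off is a reading of the text above rather than a confirmed behavior.

```python
from http import HTTPStatus
from typing import Optional


def blocked_status(
    is_bot: bool,
    is_social_crawler: bool,
    block_bots: bool,
    skip_social_tracking: bool,
) -> Optional[HTTPStatus]:
    """Return 403 Forbidden if the request is blocked, or None if it proceeds normally."""
    if not is_bot or not block_bots:
        return None  # humans, or links without bot blocking, are never blocked
    if is_social_crawler and skip_social_tracking:
        return None  # social crawlers are allowed through so previews keep working
    return HTTPStatus.FORBIDDEN


# A generic bot on a link with both settings enabled is blocked.
print(blocked_status(True, False, True, True))
# A social crawler on the same link is allowed through.
print(blocked_status(True, True, True, True))
```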

Frequently Asked Questions

Why is my link getting bot traffic?

Bots visit links to generate previews, check for spam, and index content for search engines. This is normal and usually beneficial.

Should I block robots?

In general, no. Blocking robots can cause your links to be flagged as spam by social networks and email providers.

Only block bots if you have a specific reason, such as private internal links.

Why is most of my traffic from bots?

Links shared via social media, email, or SMS often generate significant bot traffic because these platforms actively scan links for spam and security threats.

As your human traffic grows, bots will represent a smaller percentage of total clicks.

Do bots count against my click limit?

Most bots do count against your limit, because processing a bot request costs the same as processing a human one.

However, you can enable Skip social crawler tracking to exclude social media crawlers from Facebook, X, LinkedIn, Google, and YouTube from limits and analytics.

Blocked requests also don't count toward your limits.
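The counting rules in this article can be sketched as follows. The Click class, its field names, and the function name are hypothetical and exist only to illustrate the rules. They do not describe Linkly's internal data model.

```python
from dataclasses import dataclass


@dataclass
class Click:
    """Hypothetical record of one request to a link."""
    is_social_crawler: bool = False  # Facebook, X, LinkedIn, Google, YouTube crawlers
    is_ignored_agent: bool = False   # Bytespider, python-requests, curl
    was_blocked: bool = False        # request received the Access Blocked page


def counts_toward_limit(click: Click, skip_social_tracking: bool) -> bool:
    """Apply the counting rules described in this article to one request."""
    if click.was_blocked or click.is_ignored_agent:
        return False
    if click.is_social_crawler and skip_social_tracking:
        return False
    return True  # humans and all other bots count


clicks = [
    Click(),                        # human visitor
    Click(is_social_crawler=True),  # social preview crawler
    Click(is_ignored_agent=True),   # curl
    Click(was_blocked=True),        # blocked bot
    Click(),                        # unidentified bot that was not blocked
]

billable = sum(counts_toward_limit(c, skip_social_tracking=True) for c in clicks)
print(billable)  # 2
```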

Why don't I see these bots in Google Analytics?

Google Analytics relies on browser JavaScript to track visitors. Bots typically don't execute JavaScript, so they don't appear in GA reports.

Linkly records all traffic server-side, giving you complete visibility into both human and bot traffic.

How does Linkly know if a visitor is a bot?

Most legitimate bots identify themselves via their user agent string. See our list of detected bots.

For bots that don't identify themselves, we check if the request originates from a cloud hosting provider or data center, which indicates automated traffic.

Why is my link blocked when I use a VPN?

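Many VPN services route traffic through data centers that Linkly identifies as potential bot sources. If bot blocking is enabled on the link, a request made through such a VPN is treated as a bot and blocked.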

If you need to test links from another country, ask someone with a regular residential internet connection to check it for you.

What about fake clicks from ad platforms?

Invalid traffic from advertising platforms like TikTok is a different issue from bot traffic. These clicks come from real mobile devices but may not represent genuine interest.

See our article on TikTok invalid traffic for more information.